This page was generated from unit-7-optimization/01_Shape_Derivative_Levelset.ipynb.

7.1 Shape optimization via the shape derivative¶

In this tutorial we discuss the implementation of shape optimization algorithms to solve

The analytic solution to this problem is

This problem is solved by fixing an initial guess \(\Omega_0\subset \mathbf{R}^d\) and then considering the problem

where \(X:\mathbf{R}^d \to \mathbf{R}^d\) are (at least) Lipschitz vector fields. We approximate \(X\) by a finite element function.

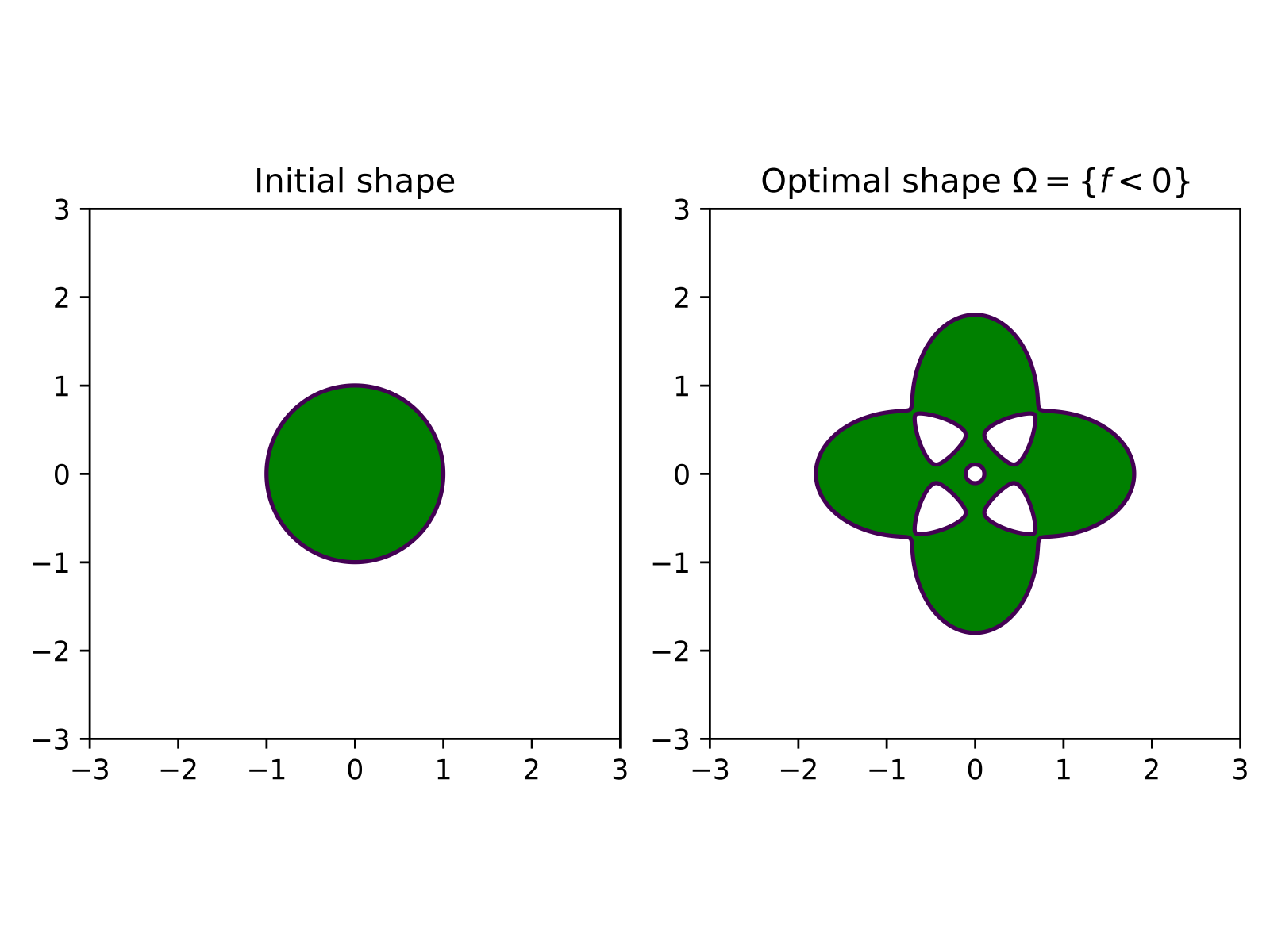

Initial geometry and shape function¶

We choose \(f\) as

where \(\varepsilon = 0.001, a = 4/5, b = 2\). The corresponding zero level sets of this function are as follows. The green area indicates where \(f\) is negative.

Let us set up the geometry.

[1]:

# Generate the geometry

from ngsolve import *

# load Netgen/NGSolve and start the gui

from ngsolve.webgui import Draw

from netgen.geom2d import SplineGeometry

geo = SplineGeometry()

geo.AddCircle((0,0), r = 2.5)

ngmesh = geo.GenerateMesh(maxh = 0.08)

mesh = Mesh(ngmesh)

mesh.Curve(2)

[1]:

<ngsolve.comp.Mesh at 0x7f8c63839e00>

[2]:

#gfset.vec[:]=0

#gfset.Set((0.2*x,0))

#mesh.SetDeformation(gfset)

#scene = Draw(gfzero,mesh,"gfset", deformation = gfset)

[3]:

# define the function f and its gradient

a =4.0/5.0

b = 2

f = CoefficientFunction((sqrt((x - a)**2 + b * y**2) - 1) \

* (sqrt((x + a)**2 + b * y**2) - 1) \

* (sqrt(b * x**2 + (y - a)**2) - 1) \

* (sqrt(b * x**2 + (y + a)**2) - 1) - 0.001)

# gradient of f defined as vector valued coefficient function

grad_f = CoefficientFunction((f.Diff(x),f.Diff(y)))

[4]:

# vector space for shape gradient

VEC = H1(mesh, order=1, dim=2)

# grid function for deformation field

gfset = GridFunction(VEC)

gfX = GridFunction(VEC)

Draw(gfset)

[4]:

BaseWebGuiScene

Shape derivative¶

[5]:

# Test and trial functions

PHI, PSI = VEC.TnT()

# shape derivative

dJOmega = LinearForm(VEC)

dJOmega += (div(PSI)*f + InnerProduct(grad_f, PSI) )*dx

Bilinear form¶

to compute the gradient \(X:= \mbox{grad}J(\Omega)\) by

[6]:

# bilinear form for H1 shape gradient; aX represents b_\Omega

aX = BilinearForm(VEC)

aX += InnerProduct(grad(PHI) + grad(PHI).trans, grad(PSI)) * dx

aX += InnerProduct(PHI, PSI) * dx

The first optimisation step¶

Fix an initial domain \(\Omega_0\) and define

Then we have the following relation between the derivative of \(\mathcal J_{\Omega_0}\) and the shape derivative of \(J\):

Here

\((\mbox{id}+X_n)(\Omega_0)\) is current shape

Now we note that \(\varphi \mapsto \varphi\circ(\mbox{id}+X_n)^{-1}\) maps the finite element space on \((\mbox{id}+X_n)(\Omega_0)\) to the finite element space on \(\Omega_0\). Therefore the following are equvalent:

assembling \(\varphi \mapsto D\mathcal J_{\Omega_0}(X_n)(\varphi)\) on the fixed domain \(\Omega_0\)

assembling \(\varphi \mapsto DJ((\mbox{id}+X_n)(\Omega_0))(\varphi)\) on the deformed domain \((\mbox{id}+X_n)(\Omega_0)\).

[7]:

# deform current domain with gfset

mesh.SetDeformation(gfset)

# assemble on deformed domain

aX.Assemble()

dJOmega.Assemble()

mesh.UnsetDeformation()

# unset deformation

Now let’s make one optimization step with step size \(\alpha_1>0\):

[8]:

# compute X_0

gfX.vec.data = aX.mat.Inverse(VEC.FreeDofs(), inverse="sparsecholesky") * dJOmega.vec

print("current cost ", Integrate(f*dx, mesh))

print("Gradient norm ", Norm(gfX.vec))

alpha = 20.0 / Norm(gfX.vec)

gfset.vec[:]=0

scene = Draw(gfset)

# input("A")

gfset.vec.data -= alpha * gfX.vec

mesh.SetDeformation(gfset)

#draw deformed shape

scene.Redraw()

# input("B")

print("cost after gradient step:", Integrate(f, mesh))

mesh.UnsetDeformation()

scene.Redraw()

current cost 63.58544060305382

Gradient norm 442.05184362948427

cost after gradient step: 5.043649318663175

Optimisation loop¶

Now we can set up an optimisation loop. In the second step we compute

by the same procedure as above.

[9]:

import time

iter_max = 50

gfset.vec[:] = 0

mesh.SetDeformation(gfset)

scene = Draw(gfset,mesh,"gfset")

converged = False

alpha =0.11#100.0 / Norm(gfX.vec)

# input("A")

for k in range(iter_max):

mesh.SetDeformation(gfset)

scene.Redraw()

Jold = Integrate(f, mesh)

print("cost at iteration ", k, ': ', Jold)

# assemble bilinear form

aX.Assemble()

# assemble shape derivative

dJOmega.Assemble()

mesh.UnsetDeformation()

gfX.vec.data = aX.mat.Inverse(VEC.FreeDofs(), inverse="sparsecholesky") * dJOmega.vec

# step size control

gfset_old = gfset.vec.CreateVector()

gfset_old.data = gfset.vec

Jnew = Jold + 1

while Jnew > Jold:

gfset.vec.data = gfset_old

gfset.vec.data -= alpha * gfX.vec

mesh.SetDeformation(gfset)

Jnew = Integrate(f, mesh)

mesh.UnsetDeformation()

if Jnew > Jold:

print("reducing step size")

alpha = 0.9*alpha

else:

print("linesearch step accepted")

alpha = alpha*1.5

break

print("step size: ", alpha, '\n')

time.sleep(0.1)

Jold = Jnew

cost at iteration 0 : 63.58544060305382

linesearch step accepted

step size: 0.165

cost at iteration 1 : -0.7382553000138545

linesearch step accepted

step size: 0.2475

cost at iteration 2 : -0.8176876285984291

linesearch step accepted

step size: 0.37124999999999997

cost at iteration 3 : -0.9325785868476248

linesearch step accepted

step size: 0.556875

cost at iteration 4 : -1.0701613164084456

linesearch step accepted

step size: 0.8353125

cost at iteration 5 : -1.1521934750329594

linesearch step accepted

step size: 1.25296875

cost at iteration 6 : -1.1547483082895464

reducing step size

reducing step size

linesearch step accepted

step size: 1.52235703125

cost at iteration 7 : -1.154955094622594

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.4982276723046877

cost at iteration 8 : -1.1550472206937452

reducing step size

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.3270326873287925

cost at iteration 9 : -1.155453494676608

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.4511102435940346

cost at iteration 10 : -1.1555977964371116

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.4281101462330694

cost at iteration 11 : -1.1556257735480633

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.4054746004152756

cost at iteration 12 : -1.1558550657345563

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.3831978279986936

cost at iteration 13 : -1.1559610148052597

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.3612741424249144

cost at iteration 14 : -1.1561635400235297

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.3396979472674797

cost at iteration 15 : -1.156193676380524

reducing step size

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.1866173613229614

cost at iteration 16 : -1.156357024476493

reducing step size

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.0510285285313932

cost at iteration 17 : -1.156434437642301

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.034369726354171

cost at iteration 18 : -1.1564637024518876

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.0179749661914572

cost at iteration 19 : -1.1564751304725112

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.0018400629773228

cost at iteration 20 : -1.156511537322834

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9859608979791321

cost at iteration 21 : -1.156564232383649

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9703334177461629

cost at iteration 22 : -1.1566297010931148

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9549536330748863

cost at iteration 23 : -1.1566995703949992

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9398176179906492

cost at iteration 24 : -1.156765520852471

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.027690565272775

cost at iteration 25 : -1.1567676713081918

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.0114016698132016

cost at iteration 26 : -1.1567784490425266

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9953709533466624

cost at iteration 27 : -1.156798428862847

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9795943237361178

cost at iteration 28 : -1.1568250029634704

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9640677537049005

cost at iteration 29 : -1.1568556930569593

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9487872798086778

cost at iteration 30 : -1.1568865442400496

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9337490014237104

cost at iteration 31 : -1.1569150632990812

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.0210545330568275

cost at iteration 32 : -1.1569198549179136

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.0048708187078768

cost at iteration 33 : -1.15692778724364

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.988943616231357

cost at iteration 34 : -1.156938362321561

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.97326885991409

cost at iteration 35 : -1.1569509591157165

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9578425484844518

cost at iteration 36 : -1.1569642145231311

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.047400826767748

cost at iteration 37 : -1.1569647976305986

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.0307995236634793

cost at iteration 38 : -1.1569668723577835

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.0144613512134133

cost at iteration 39 : -1.156971132204393

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9983821387966807

cost at iteration 40 : -1.156977460360057

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9825577818967535

cost at iteration 41 : -1.1569856919266508

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.96698424105369

cost at iteration 42 : -1.1569949177533807

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9516575408329891

cost at iteration 43 : -1.1570044550911744

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.0406375209008738

cost at iteration 44 : -1.1570056477226338

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.0241434161945948

cost at iteration 45 : -1.1570079849271222

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.0079107430479106

cost at iteration 46 : -1.1570115458839176

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9919353577706012

cost at iteration 47 : -1.1570164247726291

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9762131823499371

cost at iteration 48 : -1.1570221572034933

reducing step size

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 0.9607402034096908

cost at iteration 49 : -1.1570284025356303

reducing step size

reducing step size

reducing step size

linesearch step accepted

step size: 1.050569412428497

Using SciPy optimize toolbox¶

We use the package scipy.optimize and wrap the shape functions around it.

We first setup the initial geometry as a circle of radius r = 2.5 (as before)

[10]:

# The code in this cell is the same as in the example above.

from ngsolve import *

from ngsolve.webgui import Draw

from netgen.geom2d import SplineGeometry

import numpy as np

ngsglobals.msg_level = 1

geo = SplineGeometry()

geo.AddCircle((0,0), r = 2.5)

ngmesh = geo.GenerateMesh(maxh = 0.08)

mesh = Mesh(ngmesh)

mesh.Curve(2)

# define the function f

a =4.0/5.0

b = 2

f = CoefficientFunction((sqrt((x - a)**2 + b * y**2) - 1) \

* (sqrt((x + a)**2 + b * y**2) - 1) \

* (sqrt(b * x**2 + (y - a)**2) - 1) \

* (sqrt(b * x**2 + (y + a)**2) - 1) - 0.001)

# Now we define the finite element space VEC in which we compute the shape gradient

# element order

order = 1

VEC = H1(mesh, order=order, dim=2)

# define test and trial functions

PHI = VEC.TrialFunction()

PSI = VEC.TestFunction()

# define grid function for deformation of mesh

gfset = GridFunction(VEC)

gfset.Set((0,0))

# only for new gui

#scene = Draw(gfset, mesh, "gfset")

#if scene:

# scene.setDeformation(True)

# plot the mesh and visualise deformation

#Draw(gfset,mesh,"gfset")

#SetVisualization (deformation=True)

Generate Mesh from spline geometry

Curve elements, order = 2

Shape derivative¶

[11]:

fX = LinearForm(VEC)

# analytic shape derivative

fX += f*div(PSI)*dx + (f.Diff(x,PSI[0])) *dx + (f.Diff(y,PSI[1])) *dx

Bilinear form for shape gradient¶

Define the bilinear form

to compute the gradient \(X =: \mbox{grad}J\) by

[12]:

# Cauchy-Riemann descent CR

CR = False

# bilinear form for gradient

aX = BilinearForm(VEC)

aX += InnerProduct(grad(PHI)+grad(PHI).trans, grad(PSI))*dx + InnerProduct(PHI,PSI)*dx

## Cauchy-Riemann regularisation

if CR == True:

gamma = 100

aX += gamma * (PHI.Deriv()[0,0] - PHI.Deriv()[1,1])*(PSI.Deriv()[0,0] - PSI.Deriv()[1,1]) *dx

aX += gamma * (PHI.Deriv()[1,0] + PHI.Deriv()[0,1])*(PSI.Deriv()[1,0] + PSI.Deriv()[0,1]) *dx

aX.Assemble()

invaX = aX.mat.Inverse(VEC.FreeDofs(), inverse="sparsecholesky")

gfX = GridFunction(VEC)

Wrapping the shape function and its gradient¶

Now we define the shape function \(J\) and its gradient grad(J) use the shape derivative \(DJ\). The arguments of \(J\) and grad(J) are vector in \(\mathbf{R}^d\), where \(d\) is the dimension of the finite element space.

[13]:

def J(x_):

gfset.Set((0,0))

# x_ NumPy vector

gfset.vec.FV().NumPy()[:] += x_

mesh.SetDeformation(gfset)

cost_c = Integrate (f, mesh)

mesh.UnsetDeformation()

Redraw(blocking=True)

return cost_c

def gradJ(x_, euclid = False):

gfset.Set((0,0))

# x_ NumPy vector

gfset.vec.FV().NumPy()[:] += x_

mesh.SetDeformation(gfset)

fX.Assemble()

mesh.UnsetDeformation()

if euclid == True:

gfX.vec.data = fX.vec

else:

gfX.vec.data = invaX * fX.vec

return gfX.vec.FV().NumPy().copy()

Gradient descent and Armijo rule¶

We use the functions \(J\) and grad J to define a steepest descent method.We use scipy.optimize.line_search_armijo to compute the step size in each iteration. The Arjmijo rule reads

where \(c_1:= 1e-4\) and \(\alpha_k\) is the step size.

[14]:

# import scipy linesearch method

from scipy.optimize.linesearch import line_search_armijo

def gradient_descent(x0, J_, gradJ_):

xk_ = np.copy(x0)

# maximal iteration

it_max = 50

# count number of function evals

nfval_total = 0

print('\n')

for i in range(1, it_max):

# Compute a step size using a line_search to satisfy the Wolf

# compute shape gradient grad J

grad_xk = gradJ_(xk_,euclid = False)

# compute descent direction

pk = -gradJ_(xk_)

# eval cost function

fold = J_(xk_)

# perform armijo stepsize

if CR == True:

alpha0 = 0.15

else:

alpha0 = 0.11

step, nfval, b = line_search_armijo(J_, xk_, pk = pk, gfk = grad_xk, old_fval = fold, c1=1e-4, alpha0 = alpha0)

nfval_total += nfval

# update the shape and print cost and gradient norm

xk_ = xk_ - step * grad_xk

print('Iteration ', i, '| Cost ', fold, '| grad norm', np.linalg.norm(grad_xk))

mesh.SetDeformation(gfset)

scene.Redraw()

mesh.UnsetDeformation()

if np.linalg.norm(gradJ_(xk_)) < 1e-4:

#print('#######################################')

print('\n'+'{:<20} {:<12} '.format("##################################", ""))

print('{:<20} {:<12} '.format("### success - accuracy reached ###", ""))

print('{:<20} {:<12} '.format("##################################", ""))

print('{:<20} {:<12} '.format("gradient norm: ", np.linalg.norm(gradJ_(xk_))))

print('{:<20} {:<12} '.format("n evals f: ", nfval_total))

print('{:<20} {:<12} '.format("f val: ", fold) + '\n')

break

elif i == it_max-1:

#print('######################################')

print('\n'+'{:<20} {:<12} '.format("#######################", ""))

print('{:<20} {:<12} '.format("### maxiter reached ###", ""))

print('{:<20} {:<12} '.format("#######################", ""))

print('{:<20} {:<12} '.format("gradient norm: ", np.linalg.norm(gradJ_(xk_))))

print('{:<20} {:<12} '.format("n evals f: ", nfval_total))

print('{:<20} {:<12} '.format("f val: ", fold) + '\n')

Now we are ready to run our first optimisation algorithm:¶

[15]:

gfset.vec[:]=0

x0 = gfset.vec.FV().NumPy() # initial guess = 0

scene = Draw(gfset, mesh, "gfset")

gradient_descent(x0, J, gradJ)

Iteration 1 | Cost 63.58544060305382 | grad norm 442.05184362948427

Iteration 2 | Cost -0.7382553000138548 | grad norm 4.797321601018455

Iteration 3 | Cost -0.7857735477702836 | grad norm 4.812294575740791

Iteration 4 | Cost -0.8340109759042181 | grad norm 4.780144423275227

Iteration 5 | Cost -0.881983893823349 | grad norm 4.692239115470677

Iteration 6 | Cost -0.9285224314563473 | grad norm 4.5416445721269705

Iteration 7 | Cost -0.9723566047005454 | grad norm 4.324713174006836

Iteration 8 | Cost -1.0122540076018296 | grad norm 4.0426845384291346

Iteration 9 | Cost -1.0471892473177822 | grad norm 3.7026495061938505

Iteration 10 | Cost -1.0765050767054933 | grad norm 3.3181634341605646

Iteration 11 | Cost -1.1000167253818887 | grad norm 2.90732492212311

Iteration 12 | Cost -1.1180154448826773 | grad norm 2.490754022058955

Iteration 13 | Cost -1.1311721937086696 | grad norm 2.0883910113681714

Iteration 14 | Cost -1.140374856628482 | grad norm 1.716495522576629

Iteration 15 | Cost -1.1465562052233915 | grad norm 1.3862619992896077

Iteration 16 | Cost -1.150562263853099 | grad norm 1.1029047823734224

Iteration 17 | Cost -1.1530807854465164 | grad norm 0.8667776120942267

Iteration 18 | Cost -1.1546254652064152 | grad norm 0.6747350037169477

Iteration 19 | Cost -1.155555089885781 | grad norm 0.521601956642585

Iteration 20 | Cost -1.15610728312894 | grad norm 0.4014078557589178

Iteration 21 | Cost -1.1564329962973245 | grad norm 0.3082390241864366

Iteration 22 | Cost -1.1566250721363829 | grad norm 0.236729388003623

Iteration 23 | Cost -1.1567392179253135 | grad norm 0.18227782886365923

Iteration 24 | Cost -1.1568082424509445 | grad norm 0.14108836193150684

Iteration 25 | Cost -1.1568512059762208 | grad norm 0.11011137545386489

Iteration 26 | Cost -1.1568790758609946 | grad norm 0.08694123443763045

Iteration 27 | Cost -1.1568981259972182 | grad norm 0.06970013095634099

Iteration 28 | Cost -1.1569119356095627 | grad norm 0.05693106530543911

Iteration 29 | Cost -1.1569225483311438 | grad norm 0.04750596065756907

Iteration 30 | Cost -1.1569311374304097 | grad norm 0.040553631098362235

Iteration 31 | Cost -1.156938383007384 | grad norm 0.03540610663423864

Iteration 32 | Cost -1.1569446865801067 | grad norm 0.03155866160652364

Iteration 33 | Cost -1.1569502911220542 | grad norm 0.028637951437263578

Iteration 34 | Cost -1.156955349068707 | grad norm 0.026374404372481768

Iteration 35 | Cost -1.1569599604966179 | grad norm 0.024577686706996647

Iteration 36 | Cost -1.156964194534456 | grad norm 0.023115688255964162

Iteration 37 | Cost -1.156968101815988 | grad norm 0.021897623641615804

Iteration 38 | Cost -1.1569717214195943 | grad norm 0.020861264507975198

Iteration 39 | Cost -1.1569750846830889 | grad norm 0.019963767959634886

Iteration 40 | Cost -1.156978217692618 | grad norm 0.019175836787548774

Iteration 41 | Cost -1.1569811421514762 | grad norm 0.01847543606004201

Iteration 42 | Cost -1.1569838777622021 | grad norm 0.017849514376677995

Iteration 43 | Cost -1.1569864401815315 | grad norm 0.017283661655752045

Iteration 44 | Cost -1.1569888459059847 | grad norm 0.016770895179239378

Iteration 45 | Cost -1.156991108604262 | grad norm 0.016304608148209974

Iteration 46 | Cost -1.1569932403960685 | grad norm 0.01587952159300805

Iteration 47 | Cost -1.1569952522869715 | grad norm 0.015492096337398344

Iteration 48 | Cost -1.1569971536572254 | grad norm 0.015137178273958808

Iteration 49 | Cost -1.1569989538206762 | grad norm 0.014812385966536876

#######################

### maxiter reached ###

#######################

gradient norm: 0.014515001787420401

n evals f: 49

f val: -1.1569989538206762

L-BFGS method¶

Now we use the L-BFGS method provided by SciPy. The BFGS method requires the shape function \(J\) and its gradient grad J. We can also specify additional arguments in options, such as maximal iterations and the gradient tolerance.

In the BFGS method we replace

by

where \(H_\Omega\) is an approximation of the second shape derivative at \(\Omega\). On the discrete level we solve

[16]:

from scipy.optimize import minimize

x0 = gfset.vec.FV().NumPy()

# options for optimiser

options = {"maxiter":1000,

"disp":True,

"gtol":1e-10}

# we use quasi-Newton method L-BFGS

minimize(J, x0, method='L-BFGS-B', jac=gradJ, options=options)

RUNNING THE L-BFGS-B CODE

* * *

Machine precision = 2.220D-16

N = 7784 M = 10

This problem is unconstrained.

At X0 0 variables are exactly at the bounds

At iterate 0 f= -1.15700D+00 |proj g|= 9.63930D-04

At iterate 1 f= -1.15700D+00 |proj g|= 1.78236D-03

At iterate 2 f= -1.15703D+00 |proj g|= 1.16772D-03

At iterate 3 f= -1.15706D+00 |proj g|= 3.35736D-03

ys=-2.003E-04 -gs= 2.740E-03 BFGS update SKIPPED

At iterate 4 f= -1.15707D+00 |proj g|= 2.24781D-03

At iterate 5 f= -1.15709D+00 |proj g|= 9.32024D-04

At iterate 6 f= -1.15709D+00 |proj g|= 1.21605D-03

At iterate 7 f= -1.15709D+00 |proj g|= 1.26745D-03

At iterate 8 f= -1.15709D+00 |proj g|= 1.29862D-03

At iterate 9 f= -1.15709D+00 |proj g|= 1.33023D-03

Bad direction in the line search;

refresh the lbfgs memory and restart the iteration.

At iterate 10 f= -1.15710D+00 |proj g|= 1.52938D-03

At iterate 11 f= -1.15710D+00 |proj g|= 4.54617D-04

At iterate 12 f= -1.15710D+00 |proj g|= 4.52943D-04

At iterate 13 f= -1.15711D+00 |proj g|= 7.05745D-04

At iterate 14 f= -1.15711D+00 |proj g|= 7.05745D-04

[16]:

fun: -1.1571110014730501

hess_inv: <7784x7784 LbfgsInvHessProduct with dtype=float64>

jac: array([ 2.14530782e-06, -6.07237181e-04, 6.13396109e-04, ...,

-5.29467877e-06, 6.19934625e-06, -5.11621928e-06])

message: b'CONVERGENCE: REL_REDUCTION_OF_F_<=_FACTR*EPSMCH'

nfev: 108

nit: 14

status: 0

success: True

x: array([-5.01427824e-04, 6.98499222e-01, -6.98449755e-01, ...,

4.66153980e-03, -1.54412643e-03, 4.61902413e-03])

* * *

Tit = total number of iterations

Tnf = total number of function evaluations

Tnint = total number of segments explored during Cauchy searches

Skip = number of BFGS updates skipped

Nact = number of active bounds at final generalized Cauchy point

Projg = norm of the final projected gradient

F = final function value

* * *

N Tit Tnf Tnint Skip Nact Projg F

7784 14 108 2 1 0 7.057D-04 -1.157D+00

F = -1.1571110014730501

CONVERGENCE: REL_REDUCTION_OF_F_<=_FACTR*EPSMCH

Warning: more than 10 function and gradient

evaluations in the last line search. Termination

may possibly be caused by a bad search direction.

Cauchy time 3.357E-04 seconds.

Subspace minimization time 5.630E-03 seconds.

Line search time 2.599E+01 seconds.

Total User time 2.625E+01 seconds.

Improving mesh quality via Cauchy-Riemann equations¶

In the previous section we computed the shape gradient grad J:= X of \(J\) at \(\Omega\) via

This may lead to overly streched or even degenerated triangles. One way to improve this is to modify the above equation by

where

The two equations \(BX = 0\) are precisely the Cauchy-Riemann equations \(-\partial_x X_1 + \partial_y X_2=0\) and \(\partial_y X_1 + \partial_x X_2\). So the bigger \(\gamma\) the more angle preserving is the gradient. So by adding the B term we enforce conformaty with strengh \(\gamma\).

This only means we need to add the \(\alpha\) term to the above bilinear form aX:

[17]:

alpha = 100

aX += alpha * (PHI.Deriv()[0,0] - PHI.Deriv()[1,1])*(PSI.Deriv()[0,0] - PSI.Deriv()[1,1]) *dx

aX += alpha * (PHI.Deriv()[1,0] + PHI.Deriv()[0,1])*(PSI.Deriv()[1,0] + PSI.Deriv()[0,1]) *dx

[ ]: